tl;dr: Human centred AI (or HCAI) is a paradigm, similar to human centred design, that contains methods, tools, and principles to ensure AI is designed with humans at its heart and that it’s usable, accessible, safe, and trustworthy. It aims to give designers, researchers, technologists, developers, and entrepreneurs a framework to achieve these goals.

Note: This article is about design and written by a designer. I don’t pretend to know anything about actually developing AI models or even AI products. I do know how to make great products though.

Why is Human Centred AI important?

Let’s start with a short story. Your company decides to build a proprietary GPT wrapper that’s secure and trained on your company data. They go ahead and build it and for some unexplained reason no one is using it. It’s down on all metrics and people still insist on using OpenAI or Google variants on their phones rather than engage with the tool your company spent millions developing from scratch.

What’s going on?

Eventually someone somewhere decides to do some user engagement and find out. Turns out that people hate it. You can’t edit your prompt, you can’t stop the system from generating its answer mid-flight, and the output it does produce is riddled with errors and is probably a bit racist. Most infuriatingly, it doesn’t even solve the user need for fast, reliable answers about your company policy.

Oh no! Should we have engaged with users from the start?

Let’s look at the other end of the spectrum. Human-centred designers and researchers come on the scene early. They run workshops with stakeholders and users to reach consensus on what the system should do and to find all the current frustrations people are experiencing. It turns out that no one has any issues with getting accurate answers quickly. The real problem lies in the fiddly, high-effort tasks, like doing desk research or consolidating multiple reports into one summary.

The scope is tweaked, some money is saved, and the team proceeds to build a focused, single-purpose chatbot that does desk research and writes research summaries.

The team puts in clear measures that show the product is moderately successful and saves people a bit of time every day. Everyone is happy.

What the research is saying

In our previous research we spoke to several individuals around the Manchester area to understand their usage of generative AI at home. The main theme was that people use it for small and low-risk tasks. Finding recipes, writing short, fun poems in another language, writing a complaint email, that kind of stuff.

In other, “real” academic research, HCAI is all about developing models that don’t kill us all or turn the world into a dystopian Matrix-esque nightmare. There are things there we can adapt for our purposes.

There is a lot of academic writing on the topic out there. I checked. Most of it deals with the development of AI, but some of it is useful in the design context.

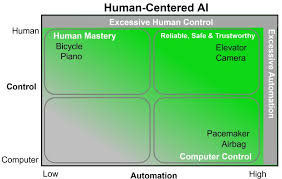

Ben Shneiderman wrote Human Centred AI in 2020 and an updated edition in 2022. It described the need for involving humans in the AI development process. However, it also showed several frameworks for AI-Human interaction. Like this one:

It shows the level of automation and control over various objects. When designing products, it’s worth keeping in mind features that go into the “Excessive” buckets. That GPT wrapper I described earlier and that you couldn’t stop? Excessive automation.

In another paper, Xu Wei proposes a methodological framework that’s rather complicated and geared towards developers, but it includes several principles that I adapted for our work at Deloitte.

Human centred AI principles

Principle 1: Usable

The AI must be usable. Duh. You ever used an AI tool that was a pain to use? I had this experience with many an “internal proprietary AI tool” across industries and clients. You want to upload a document? Click on this button. Then that button. Then wait. Then click to “Add file to chat”. Disgusting.

Methods to consider

- Usability testing

- Accessibility testing

- Co-design sessions

Principle 2: Useful

You ever used an AI tool and thought, “why is this here?” Remember the failed WhatsApp chatbot? I asked it, “why are you here?” and it told me it’s there to answer questions and be a buddy. How is it different to clicking on an app on your phone then? No different. What’s the USP? It’s in your WhatsApp. We are obliged to design tools that actually answer a need. A product without a need is just an expensive failure.

Methods to consider

- Visioning workshop

- Process mapping

- Service blueprinting

- User journey mapping

- Prioritisation frameworks (ICE, MoSCoW)

- Decision trees
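For the ICE framework in the list above, here is a minimal sketch of how the scoring might work. ICE stands for Impact, Confidence, and Ease. This version multiplies the three ratings, though some teams average them instead. The feature names and numbers are made up for illustration and loosely follow the chatbot story from earlier; they are not real research data.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    """A candidate feature rated 1-10 on each ICE dimension."""
    name: str
    impact: int      # how much it would help users
    confidence: int  # how sure we are, based on research
    ease: int        # how easy it is to build

    @property
    def ice_score(self) -> int:
        # Assumption: multiply the three ratings (averaging is another common variant).
        return self.impact * self.confidence * self.ease


# Hypothetical ratings, for illustration only.
ideas = [
    Idea("General policy Q&A chatbot", impact=3, confidence=4, ease=5),
    Idea("Desk research assistant", impact=8, confidence=7, ease=6),
    Idea("Report summariser", impact=7, confidence=8, ease=7),
]

# Highest score first: these are the ideas to discuss with the team first.
ranked = sorted(ideas, key=lambda idea: idea.ice_score, reverse=True)
for idea in ranked:
    print(f"{idea.ice_score:>4}  {idea.name}")
```

The score is a conversation starter for a prioritisation workshop, not a verdict. The ratings themselves should come out of the user research.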

Principle 3: Trustworthy

How do you know you can trust the chat’s output? We know generative AI can be biased and error prone. There are ways we can increase this trust. There is a neat list of AI patterns out there on the Shape of AI website. Here are some of my favourites that I’ve used in the past.

Patterns to consider

- Caveat (AI responses can be inaccurate)

- Consent (Do you consent to us using this data for training?)

- Watermarks (AI generated)

- Showing your work (not on Shape of AI but Perplexity and GPT-5 are doing this now)

Principle 4: Scalable

Scalable can relate both to the techy under-the-hood stuff and to the product itself. A product that requires constant monitoring and is prone to errors is not scalable. A product that fits seamlessly into users’ everyday workflows is. A workflow is predictable, debuggable, and scalable. A loose cannon agent that makes decisions on its own is none of these things.

Methods to consider

- Workflow mapping

- Business process mapping

- Service blueprinting

- User journey mapping

Principle 5: Responsible

Oh ethics, my favourite topic. How do we make sure the AI isn’t bad? How do you make it ethically sound but also use it in a safe and ethical way? There are lots of issues there. The black box problem, the biases, the errors, the AI psychosis, people using AI in unethical ways to fudge experiments with synthetic data… the list goes on and on.

Mitigation strategies to consider

- Human review of training data

- Publish guidelines and policies for safe AI use

- Human review of outputs

Principle 6: Empowering

Closely related to it being useful, this goes one step beyond. What’s the point in creating an expensive tool if it only helps people and doesn’t also empower them to be better? It’s a debate between augmentation and automation. Automation makes a task easy, augmentation allows people to do things they never thought possible. To do this you really need to understand human needs and find ways to create a symbiotic relationship.

Methods to consider

- In-depth interviews

- Co-creation workshops

- Service blueprinting

Principle 7: Controllable

My pet hate. Just add that “Stop” button in! It goes beyond that of course. How do we create an AI that keeps the user in the driving seat? That doesn’t do anything unexpected but also has enough automation to be useful? It’s a fine balance and goes back to Ben Shneiderman’s automation vs. control diagram above. There is a beautiful blog that goes into a lot of detail on this topic here.

Methods and patterns to consider

- Operator Event Sequence Diagrams (OESD)

- Various toggles to control variables in an output (e.g., MidJourney has a detail toggle)

- Stop button (obv!)
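For anyone handing the Stop button over to developers, here is a rough sketch of the idea, written by way of illustration rather than as a real implementation. It assumes the answer arrives piece by piece (as it does in most chat tools) and that the Stop button flips a shared flag. The names `stream_until_stopped` and `fake_model_tokens` are hypothetical, and the “model” is just a hard-coded list of words.

```python
import threading
from typing import Iterable, Iterator


def stream_until_stopped(tokens: Iterable[str], stop: threading.Event) -> Iterator[str]:
    """Pass tokens through one at a time, ending early once `stop` is set."""
    for token in tokens:
        if stop.is_set():
            return  # the user pressed Stop: produce nothing further
        yield token


def fake_model_tokens() -> Iterator[str]:
    # Hypothetical stand-in for a real model's streaming response.
    yield from ["Our", " leave", " policy", " allows", " 25", " days", "."]


stop_requested = threading.Event()  # a real Stop button handler would call .set()

shown = []
for token in stream_until_stopped(fake_model_tokens(), stop_requested):
    shown.append(token)
    if len(shown) == 3:
        stop_requested.set()  # simulate the user pressing Stop mid-answer

partial_answer = "".join(shown)
print(partial_answer)  # prints "Our leave policy"
```

The design point is that whatever was generated before the user pressed Stop stays on screen. The user keeps the partial answer and gets control back immediately.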

Conclusion

Human centred AI is the next evolution of human centred design. It’s not a paradigm shift, per se, but more of an update for the age of AI. The tools are generally similar: talk to users. If you’d like to dive into the topic further here’s a list of reading materials that are worth your consideration: