I’ve been thinking about this over the weekend. We spent the last two years feverishly trying out approaches to make research analysis easier for ourselves. First we tried simply dropping all our interview transcripts into the chat and letting it analyse them. When that didn’t work, and honestly resulted in more hallucinations than anyone thought possible, we changed tack and tried to chunk the data. We experimented with aligning transcripts to interview questions. We even, at one point, added interview transcripts to a CSV file, pre-processed them, and created a JSON output to make them more “LLM friendly”. Every time something was missing.

LLMs are inherently black boxes of magic. Stuff goes in, complicated statistical stuff goes on, AI slop comes out. Sure, they’re useful in their own small way for writing stuff that sounds like it was written by AI, or for summarising some high-level stuff.

Slight tangent. I recently read some _insights_ created by an LLM from a bunch of transcripts. Turns out that data scientists and analysts _need_ to process data. Blew my mind.

Back to our black boxes of magic. The point is, no one, not even the developers, really knows what’s going on inside that black box of an LLM. Once the training data is in, that’s it. It goes and does stuff. And that is a problem.

How can we trust anything the LLM produces if we can’t explain it?

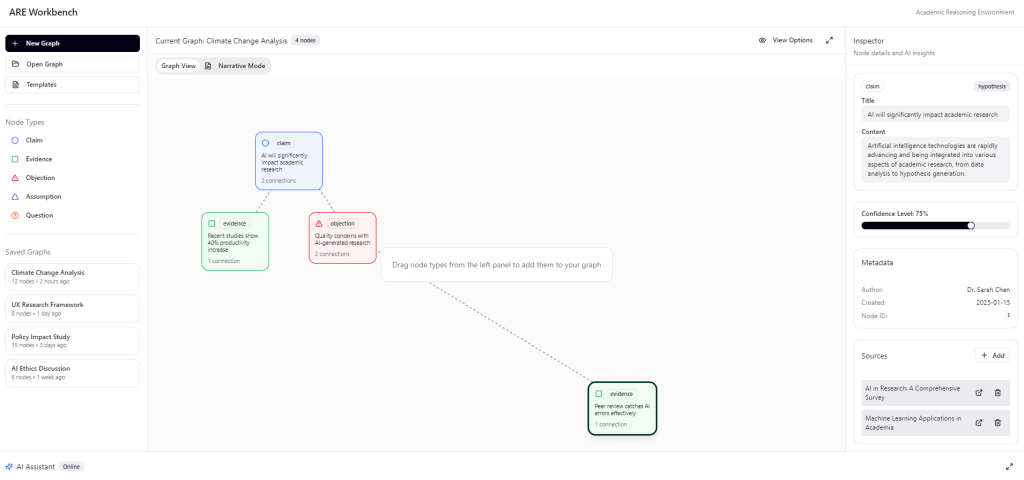

So here’s my humble contribution to the field of Explainable AI. I present to you the first epistemologically transparent cognitive dashboard:

If you’ve ever seen debating or argument-building software, it’s pretty much that. I’m not reinventing the wheel here. The difference is in who the users are. I envision mini-agents building logical chains of arguments to support any claim. Think of it as a cognitive assistant to make research more traceable. It’s AI-powered in the sense that mini-AI-agents are each busy working on a single piece of evidence at a time.

You upload your sources, and the little swarm of agents goes and breaks them into pieces, processes them, tokenizes them, and builds a shared context together. Then you, the user, can interrogate the data, make claims, ask the AI to build argument graphs to support your arguments, and so on. Suddenly we’re using LLMs to help us construct knowledge and logic, rather than mindlessly processing context windows to spit out a hallucination of what the truth might be.
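To make that a bit more concrete, here is a rough Python sketch of the "break sources into pieces" step. Everything in it is my own assumption for illustration: the `Evidence` type, the `chunk_source` and `find_evidence` helpers, and the file name are all hypothetical, and a dumb keyword match stands in for whatever the agents would really do. The only point is that every piece keeps a pointer back to where it came from.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Evidence:
    """One traceable piece of a source (hypothetical type, for illustration)."""
    source_id: str  # which uploaded file the text came from
    start: int      # character offset where the piece starts in the source
    end: int        # character offset where the piece ends
    text: str


def chunk_source(source_id: str, text: str) -> list[Evidence]:
    """Split a source into paragraph-level pieces, keeping offsets for traceability."""
    pieces = []
    cursor = 0
    for paragraph in text.split("\n\n"):
        start = text.index(paragraph, cursor)
        end = start + len(paragraph)
        cursor = end
        if paragraph.strip():  # skip empty paragraphs
            pieces.append(Evidence(source_id, start, end, paragraph))
    return pieces


def _words(text: str) -> set[str]:
    """Lowercased words with trailing punctuation stripped."""
    return {w.lower().strip(".,!?:;") for w in text.split()}


def find_evidence(claim: str, pieces: list[Evidence]) -> list[Evidence]:
    """Naive stand-in for the agents: keep pieces sharing a longer word with the claim."""
    claim_words = {w for w in _words(claim) if len(w) > 3}
    return [p for p in pieces if claim_words & _words(p.text)]


transcript = "P1: Onboarding took too long.\n\nP2: I liked the pricing page."
pieces = chunk_source("interview-01.txt", transcript)
for hit in find_evidence("Onboarding is slow", pieces):
    print(f"{hit.source_id}[{hit.start}:{hit.end}] {hit.text}")
```

In a real version the keyword match would be replaced by the agents themselves, but the offsets are the part worth keeping: any claim can be traced back to the exact span of the exact source.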

Even as ChatGPT sycophantically praised my idea as a paradigm shift in human cognition (yeah… no…), I still couldn’t help but think how neat it would be to have traceable argument chains that show how the LLM came to this or that conclusion.

Perhaps we can use it in research repositories, perhaps in academia, policy, strategy. Perhaps we can stop the decline of critical thinking in schools by saying, “cool, you want to use AI? Great! Bring me an AI-written paper with epistemological transparency. Show me how you got the answer!” Wouldn’t it be neat?

You could dive into sources to inspect citations, contradictions, and the epistemic status of a node. You could mess around with all sorts of fun metadata that your helpful swarm of agents helpfully made for you. It’s not really NotebookLM, or Obsidian. It doesn’t just take your notes and spit out things it knows about them. It’s a structured reasoning tool that makes LLMs less of a black box of magic.
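Here is roughly how I picture a node in that argument graph, as a minimal Python sketch. None of this exists yet: the `Node` fields, the status labels, and the `epistemic_status` and `trace` helpers are assumptions I made up for illustration, and the sketch assumes the graph has no cycles.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    """A claim or a piece of evidence in the argument graph (hypothetical shape)."""
    node_id: str
    text: str
    citation: Optional[str] = None  # source reference for evidence; None for claims
    supports: list["Node"] = field(default_factory=list)     # nodes backing this one
    contradicts: list["Node"] = field(default_factory=list)  # nodes arguing against it


def epistemic_status(node: Node) -> str:
    """Derive a coarse status from the edges attached to a node."""
    if node.supports and node.contradicts:
        return "contested"
    if node.supports:
        return "supported"
    if node.contradicts:
        return "contradicted"
    return "sourced" if node.citation else "unsupported"


def trace(node: Node, depth: int = 0, relation: str = "claim") -> list[str]:
    """Return the argument chain under a node as indented, readable lines."""
    cite = f" [{node.citation}]" if node.citation else ""
    lines = [f"{'  ' * depth}{relation}: {node.text}{cite}"]
    for child in node.supports:
        lines.extend(trace(child, depth + 1, "supports"))
    for child in node.contradicts:
        lines.extend(trace(child, depth + 1, "contradicts"))
    return lines


claim = Node("c1", "Onboarding is too slow")
claim.supports.append(
    Node("e1", "P1 said setup took a week", citation="interview-01.txt"))
claim.contradicts.append(
    Node("e2", "P2 was up and running in an hour", citation="interview-02.txt"))

print(epistemic_status(claim))
print("\n".join(trace(claim)))
```

The status here is derived purely from which edges exist, which is deliberately crude. A real tool would probably weigh the quality of the evidence as well, but even this much makes a contested claim visible at a glance.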

So… I guess this is a call to action. It would be great to connect with researchers, engineers, or just people who care about the epistemic transparency of LLMs. Honestly, if I could build this I would use the crap out of it as a research repo and analysis tool.

Drop me a line! We can get the MVP out in no time 😉